This post covers three learning-rate schedulers, Warm-up + CosineAnnealingLR, Warm-up + ExponentialLR, and Warm-up + StepLR, and provides a template for using them.

Sharing the PyTorch Schedulers I Use

小嗷犬Scheduler

Warm-up + CosineAnnealingLR

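```python
import math

warm_up_iter = 10  # number of warm-up steps
T_max = 50         # total number of steps
lr_max = 0.1       # peak learning rate, reached when warm-up ends
lr_min = 1e-5      # starting and final learning rate


def WarmupCosineAnnealingLR(cur_iter):
    if cur_iter < warm_up_iter:
        # Linear warm-up from lr_min towards lr_max.
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    else:
        # Cosine annealing from lr_max down to lr_min.
        return lr_min + 0.5 * (lr_max - lr_min) * (
            1 + math.cos((cur_iter - warm_up_iter) / (T_max - warm_up_iter) * math.pi)
        )
```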

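For the first warm_up_iter steps, the learning rate increases linearly from lr_min to lr_max; for the remaining T_max - warm_up_iter steps, it follows cosine annealing from lr_max down to lr_min.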
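To sanity-check the endpoints described above, here is a minimal sketch that evaluates the schedule at a few steps. The name `warmup_cosine_lr` and its keyword defaults are hypothetical; they simply mirror the constants used in this post. One deliberate difference, labeled as an assumption in the code: progress is clamped to 1.0, because the unclamped cosine would start rising again if the function were called with a step beyond T_max.

```python
import math


def warmup_cosine_lr(cur_iter, warm_up_iter=10, T_max=50, lr_max=0.1, lr_min=1e-5):
    """Hypothetical parameterized copy of the warm-up + cosine schedule."""
    if cur_iter < warm_up_iter:
        # Linear warm-up from lr_min towards lr_max.
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    # Assumption: clamp progress so the rate stays at lr_min past T_max
    # instead of following the cosine back up.
    progress = min(1.0, (cur_iter - warm_up_iter) / (T_max - warm_up_iter))
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(progress * math.pi))


# Start, mid warm-up, end of warm-up, mid decay, T_max, and past T_max.
for it in (0, 5, 10, 30, 50, 60):
    print(f"iter {it:>2}: lr = {warmup_cosine_lr(it):.6f}")
```

The rate should start at lr_min, peak at lr_max when warm-up ends, pass the midpoint between the two halfway through the decay, and settle at lr_min from T_max onward.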

Warm-up + ExponentialLR

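```python
import math

warm_up_iter = 10  # number of warm-up steps
T_max = 50         # total number of steps
lr_max = 0.1       # peak learning rate, reached when warm-up ends
lr_min = 1e-5      # starting and final learning rate


def WarmupExponentialLR(cur_iter):
    # Per-step decay factor chosen so that lr_max decays to lr_min
    # over exactly T_max - warm_up_iter steps.
    gamma = math.exp(math.log(lr_min / lr_max) / (T_max - warm_up_iter))
    if cur_iter < warm_up_iter:
        # Linear warm-up from lr_min towards lr_max.
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    else:
        # Exponential decay from lr_max down to lr_min.
        return lr_max * gamma ** (cur_iter - warm_up_iter)
```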

For the first warm_up_iter steps, the learning rate increases linearly from lr_min to lr_max; for the remaining T_max - warm_up_iter steps, it decays exponentially from lr_max down to lr_min.
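The decay factor gamma in the code follows from requiring that the decay phase land exactly on lr_min after T_max - warm_up_iter steps. A short derivation, using the same symbols as the code and the constants from this post for the numeric value:

```latex
\begin{aligned}
\mathrm{lr\_max}\cdot\gamma^{\,\mathrm{T\_max}-\mathrm{warm\_up\_iter}} &= \mathrm{lr\_min} \\
\gamma &= \left(\frac{\mathrm{lr\_min}}{\mathrm{lr\_max}}\right)^{\frac{1}{\mathrm{T\_max}-\mathrm{warm\_up\_iter}}}
        = \exp\!\left(\frac{\ln(\mathrm{lr\_min}/\mathrm{lr\_max})}{\mathrm{T\_max}-\mathrm{warm\_up\_iter}}\right) \\
\gamma &= \left(\frac{10^{-5}}{10^{-1}}\right)^{1/40} = 10^{-0.1} \approx 0.794
\end{aligned}
```

With these values, each step after warm-up multiplies the learning rate by roughly 0.794.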

Warm-up + StepLR

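```python
import math

warm_up_iter = 10  # number of warm-up steps
T_max = 50         # total number of steps
lr_max = 0.1       # peak learning rate, reached when warm-up ends
lr_min = 1e-5      # starting and final learning rate
step_size = 3      # number of steps between two decays


def WarmupStepLR(cur_iter):
    # Per-drop decay factor chosen so that lr_max reaches lr_min after
    # (T_max - warm_up_iter) // step_size drops.
    gamma = math.exp(math.log(lr_min / lr_max) / ((T_max - warm_up_iter) // step_size))
    if cur_iter < warm_up_iter:
        # Linear warm-up from lr_min towards lr_max.
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    else:
        # Stepwise exponential decay: the rate drops once every step_size steps.
        return lr_max * gamma ** ((cur_iter - warm_up_iter) // step_size)
```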

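For the first warm_up_iter steps, the learning rate increases linearly from lr_min to lr_max; for the remaining T_max - warm_up_iter steps, it decays exponentially from lr_max down to lr_min, dropping once every step_size steps.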
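To make the "once every step_size steps" behaviour concrete, the following minimal sketch groups the decay-phase steps by the learning rate they receive. The name `warmup_step_lr` and its keyword defaults are hypothetical and mirror the constants used in this post.

```python
import math


def warmup_step_lr(cur_iter, warm_up_iter=10, T_max=50, lr_max=0.1, lr_min=1e-5, step_size=3):
    """Hypothetical parameterized copy of the warm-up + step schedule."""
    n_drops = (T_max - warm_up_iter) // step_size
    gamma = math.exp(math.log(lr_min / lr_max) / n_drops)
    if cur_iter < warm_up_iter:
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    return lr_max * gamma ** ((cur_iter - warm_up_iter) // step_size)


# Group the decay-phase steps by learning rate: each group is one plateau.
plateaus = {}
for it in range(10, 50):
    plateaus.setdefault(warmup_step_lr(it), []).append(it)

for lr, iters in plateaus.items():
    print(f"lr = {lr:.6f} for steps {iters[0]}..{iters[-1]}")
```

With step_size = 3 and 40 decay steps, the integer division gives 13 drops, so the final drop to lr_min happens at step 49 and the last plateau holds that single step.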

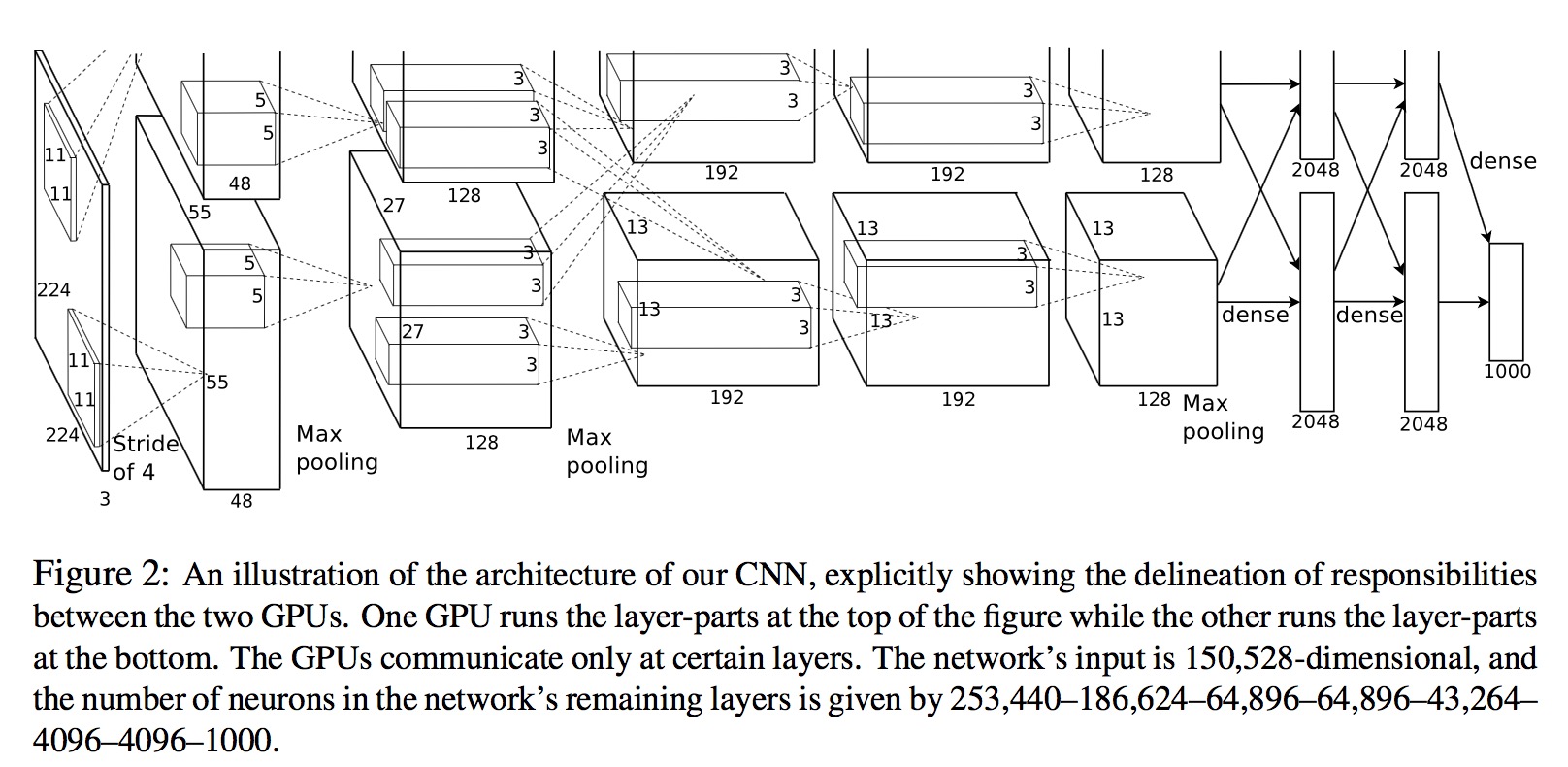

Visual comparison
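A comparison like the one in the figure could be produced along the following lines. This is only a sketch, not the script behind the figure: the helper names (`build_curves`, `plot_curves`), the axis labels, and the output file name are all hypothetical, and plotting assumes matplotlib is installed.

```python
import math

WARM_UP_ITER, T_MAX, LR_MAX, LR_MIN, STEP_SIZE = 10, 50, 0.1, 1e-5, 3
DECAY_ITERS = T_MAX - WARM_UP_ITER


def warmup(cur_iter):
    # Shared linear warm-up from LR_MIN towards LR_MAX.
    return (LR_MAX - LR_MIN) * (cur_iter / WARM_UP_ITER) + LR_MIN


def cosine(cur_iter):
    if cur_iter < WARM_UP_ITER:
        return warmup(cur_iter)
    phase = (cur_iter - WARM_UP_ITER) / DECAY_ITERS * math.pi
    return LR_MIN + 0.5 * (LR_MAX - LR_MIN) * (1 + math.cos(phase))


def exponential(cur_iter):
    if cur_iter < WARM_UP_ITER:
        return warmup(cur_iter)
    gamma = math.exp(math.log(LR_MIN / LR_MAX) / DECAY_ITERS)
    return LR_MAX * gamma ** (cur_iter - WARM_UP_ITER)


def step(cur_iter):
    if cur_iter < WARM_UP_ITER:
        return warmup(cur_iter)
    gamma = math.exp(math.log(LR_MIN / LR_MAX) / (DECAY_ITERS // STEP_SIZE))
    return LR_MAX * gamma ** ((cur_iter - WARM_UP_ITER) // STEP_SIZE)


def build_curves():
    """Return the step indices and one list of learning rates per schedule."""
    iters = list(range(T_MAX + 1))
    curves = {
        "Warm-up + CosineAnnealingLR": [cosine(i) for i in iters],
        "Warm-up + ExponentialLR": [exponential(i) for i in iters],
        "Warm-up + StepLR": [step(i) for i in iters],
    }
    return iters, curves


def plot_curves(path="schedulers.png"):
    """Save a line plot of the three schedules (assumes matplotlib is available)."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file without needing a display
    import matplotlib.pyplot as plt

    iters, curves = build_curves()
    fig, ax = plt.subplots()
    for label, values in curves.items():
        ax.plot(iters, values, label=label)
    ax.set_xlabel("iteration")
    ax.set_ylabel("learning rate")
    ax.legend()
    fig.savefig(path)
```

Calling `plot_curves()` writes the image to disk; `build_curves()` can be used on its own when only the raw values are needed.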

PyTorch template

```python
import math

import torch
from torch import nn, optim
from torch.optim.lr_scheduler import LambdaLR

warm_up_iter = 10
T_max = 50
lr_max = 0.1
lr_min = 1e-5


def WarmupCosineAnnealingLR(cur_iter):
    if cur_iter < warm_up_iter:
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    else:
        return lr_min + 0.5 * (lr_max - lr_min) * (
            1 + math.cos((cur_iter - warm_up_iter) / (T_max - warm_up_iter) * math.pi)
        )


model = nn.Linear(1, 1)
# LambdaLR multiplies the optimizer's initial lr by the value lr_lambda returns,
# so start from lr_max and pass the schedule as a fraction of lr_max.
optimizer = optim.AdamW(model.parameters(), lr=lr_max)
scheduler = LambdaLR(
    optimizer, lr_lambda=lambda cur_iter: WarmupCosineAnnealingLR(cur_iter) / lr_max
)

for epoch in range(T_max):
    train(...)  # placeholder for your training loop
    valid(...)  # placeholder for your validation loop
    scheduler.step()
```
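`LambdaLR` sets each parameter group's learning rate to its initial value multiplied by whatever `lr_lambda` returns, so a function that returns an absolute learning rate has to be rescaled before it is passed in. The following minimal sketch records the rate actually applied at each epoch; it assumes the schedule is meant as an absolute rate and divides by lr_max, with the optimizer's initial rate set to lr_max. The names `schedule` and `applied` and the dummy forward/backward pass are hypothetical stand-ins for a real training step.

```python
import math

import torch
from torch import nn, optim
from torch.optim.lr_scheduler import LambdaLR

warm_up_iter, T_max, lr_max, lr_min = 10, 50, 0.1, 1e-5


def schedule(cur_iter):
    """Absolute learning rate for a step: linear warm-up, then cosine annealing."""
    if cur_iter < warm_up_iter:
        return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min
    phase = (cur_iter - warm_up_iter) / (T_max - warm_up_iter) * math.pi
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(phase))


model = nn.Linear(1, 1)
optimizer = optim.AdamW(model.parameters(), lr=lr_max)
# Pass the schedule as a fraction of the initial lr, since LambdaLR multiplies.
scheduler = LambdaLR(optimizer, lr_lambda=lambda it: schedule(it) / lr_max)

applied = []
for epoch in range(T_max):
    applied.append(scheduler.get_last_lr()[0])  # rate used during this epoch
    loss = model(torch.ones(1, 1)).sum()        # dummy stand-in for training
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    scheduler.step()

print(applied[0], applied[warm_up_iter], applied[-1])
```

Because the loop runs for `range(T_max)`, the last applied rate corresponds to step T_max - 1, which is slightly above lr_min for the cosine schedule.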